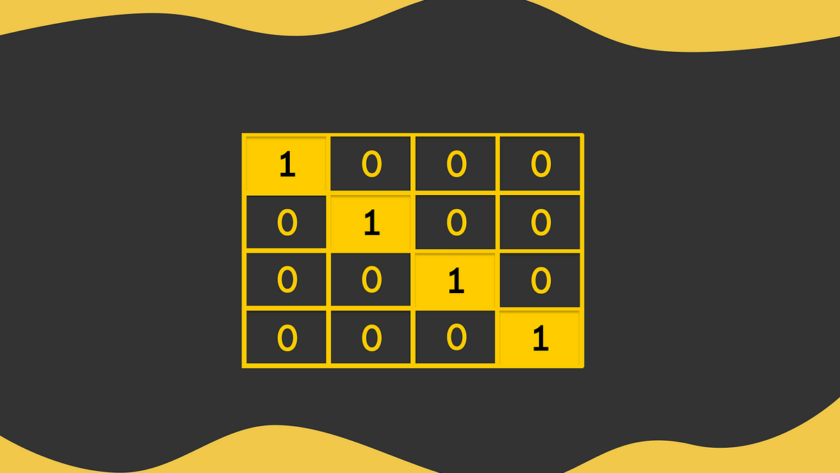

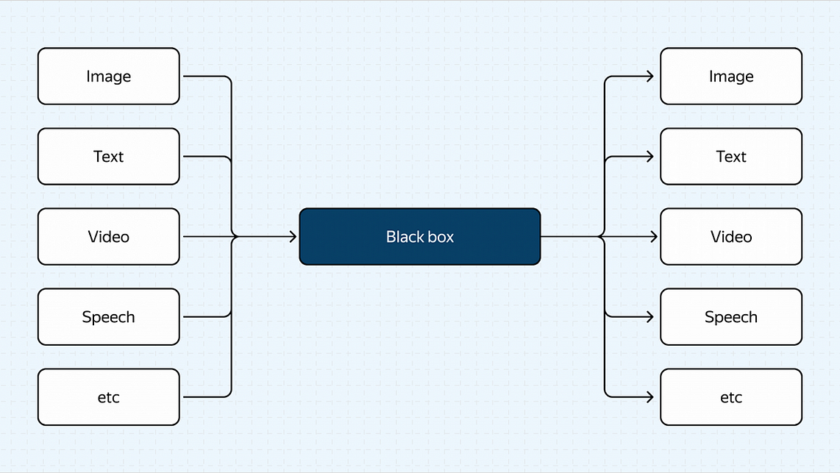

Learning to transform categorical data into a format that a machine learning model can understand

When studying machine learning, it is essential to understand the inner workings of the most basic algorithms. Doing so makes it easier to understand how algorithms work in popular libraries and frameworks, to debug them, to choose better hyperparameters, and…
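The excerpt is cut off before it names the transformation it builds, so the following is only an assumption. It is a minimal from-scratch sketch of one-hot encoding, one common way to turn a categorical column into numeric features a model can consume. The function name `one_hot_encode` and the choice to sort categories alphabetically are illustrative, not taken from the article.

```python
from collections.abc import Hashable, Sequence


# Hypothetical helper: a from-scratch one-hot encoder, assumed for illustration.
def one_hot_encode(values: Sequence[Hashable]) -> tuple[list[Hashable], list[list[int]]]:
    """Encode a column of categorical values as one-hot vectors.

    Returns the list of distinct categories (which fixes the column order)
    and one 0/1 row per input value.
    """
    # Sort the distinct categories so the column order is deterministic.
    categories = sorted(set(values), key=str)
    # Map each category to the index of its column.
    index = {cat: i for i, cat in enumerate(categories)}

    rows = []
    for v in values:
        row = [0] * len(categories)
        row[index[v]] = 1  # set only the column for this value's category
        rows.append(row)
    return categories, rows


if __name__ == "__main__":
    cats, encoded = one_hot_encode(["red", "green", "blue", "green"])
    print(cats)
    for row in encoded:
        print(row)
```

Each input value becomes a vector with a single 1 in the column for its category, so the model sees no artificial ordering between categories (unlike mapping them to the integers 0, 1, 2). A production encoder would also need a policy for categories that appear only at prediction time. This sketch raises a `KeyError` in that case.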