Survival Analysis is a statistical approach used to answer the question: “How long will something last?” That “something” could range from a patient’s lifespan to the durability of a machine…

LLMs have made significant strides in language-related tasks such as conversational AI, reasoning, and code generation. However, human communication extends beyond text, often incorporating visual elements to enhance understanding. To…

We’ve seen developers doing amazing things with Gemini 2.5 Pro, so we decided to release an updated version a couple of weeks early to get it into developers’ hands sooner. Today…

It’s well known that what we eat matters — but what if when and how often we eat matters just as much?

In the midst of ongoing scientific debate around the benefits of intermittent fasting, this question…

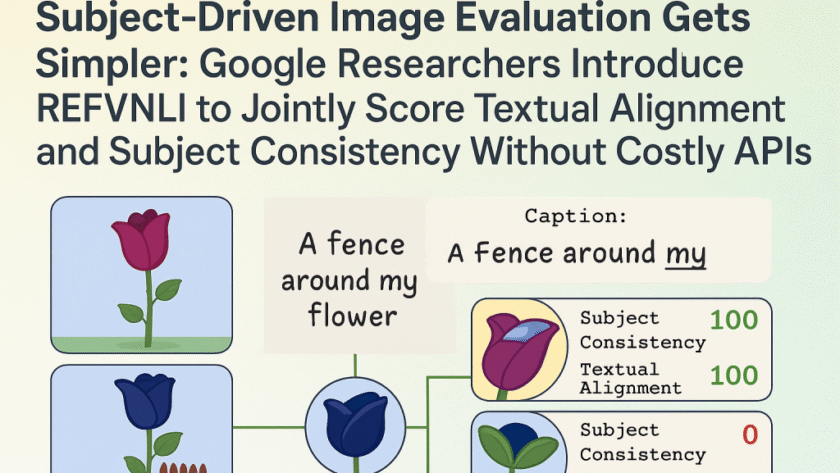

Text-to-image (T2I) generation has evolved to include subject-driven approaches, which enhance standard T2I models by incorporating reference images alongside text prompts. This advancement allows for more precise subject representation in…

Sharing DolphinGemma with the research community

Recognizing the value of collaboration in scientific discovery, we’re planning to share DolphinGemma as an open model this summer. While trained on Atlantic spotted…

Why you should read this

As someone who did a Bachelor’s in Mathematics, I was first introduced to L¹ and L² as a measure of distance… now it seems to be…

Video captioning models are typically trained on datasets consisting of short videos, usually under three minutes in length, paired with corresponding captions. While this enables them to describe basic actions…

Music AI Sandbox was developed by Adam Roberts, Amy Stuart, Ari Troper, Beat Gfeller, Chris Deaner, Chris Reardon, Colin McArdell, DY Kim, Ethan Manilow, Felix Riedel, George Brower, Hema Manickavasagam,…

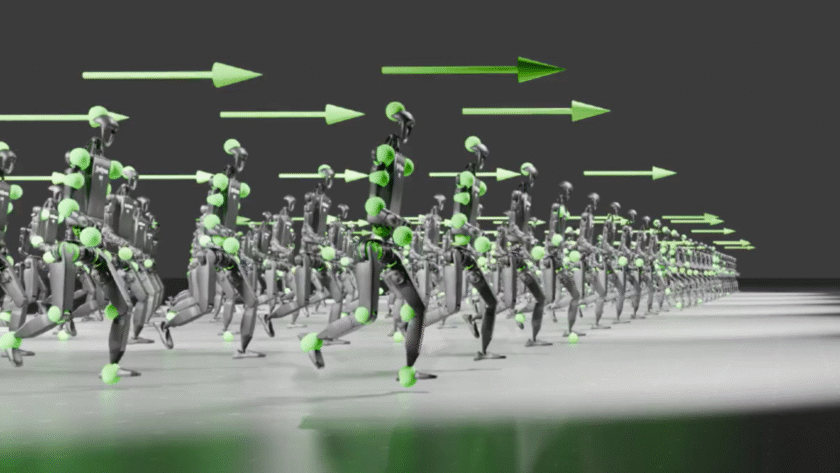

Robotics has advanced significantly. For many years, there have been expectations of human-like robots that can navigate our environments, perform complex tasks, and work alongside humans. Examples…