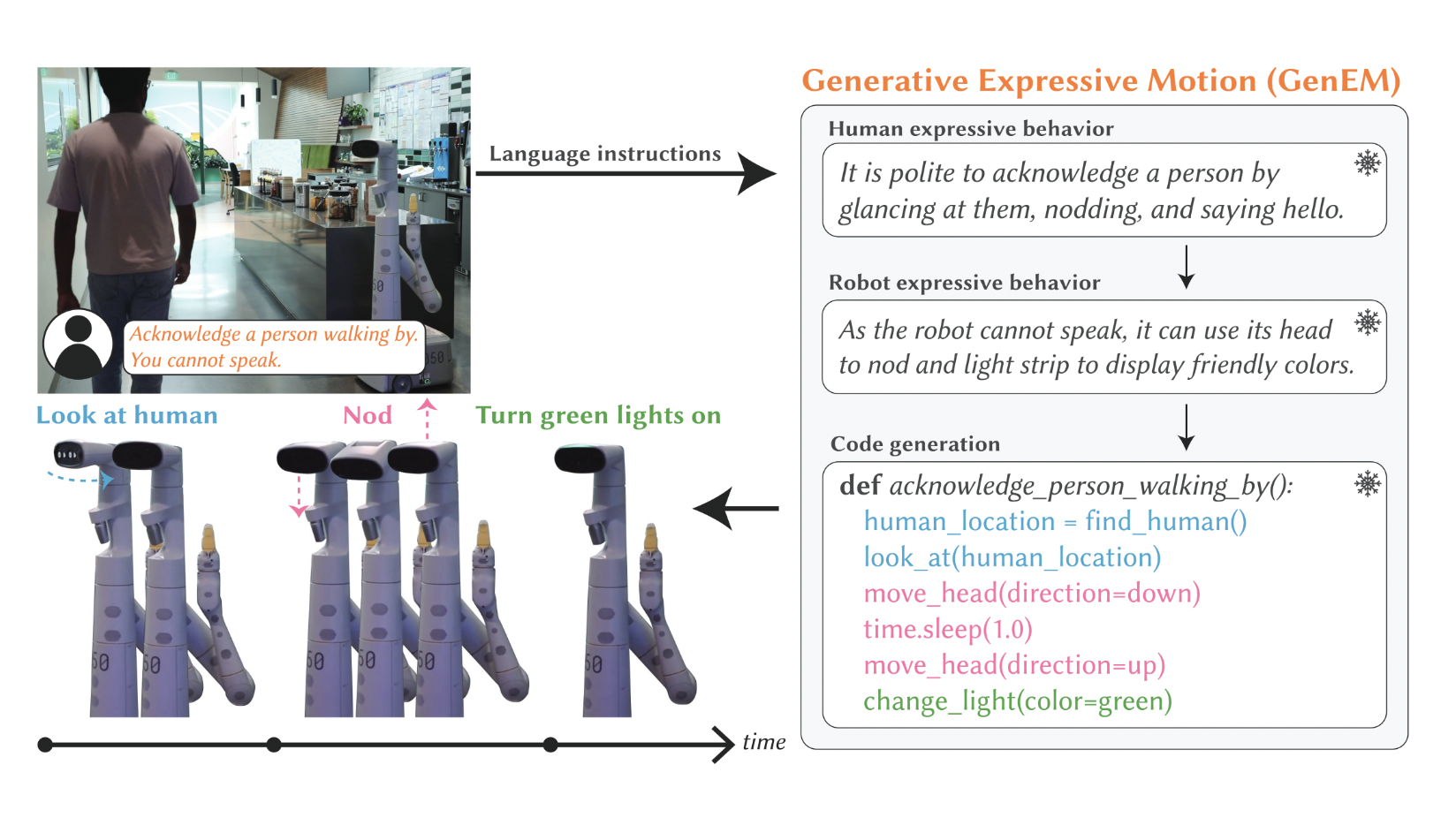

Human-robot interaction poses numerous challenges. One is enabling robots to display human-like expressive behaviors. Traditional rule-based methods scale poorly to new social contexts, while data-driven approaches are limited by their need for extensive, specific datasets. This limitation becomes more pronounced as the variety of social interactions a robot might encounter increases, creating a demand…