Image by storyset on Freepik

It's a great time to break into data engineering. So where do you start?

Learning data engineering can feel overwhelming because of the sheer number of tools you need to know, not to mention the super intimidating job descriptions!

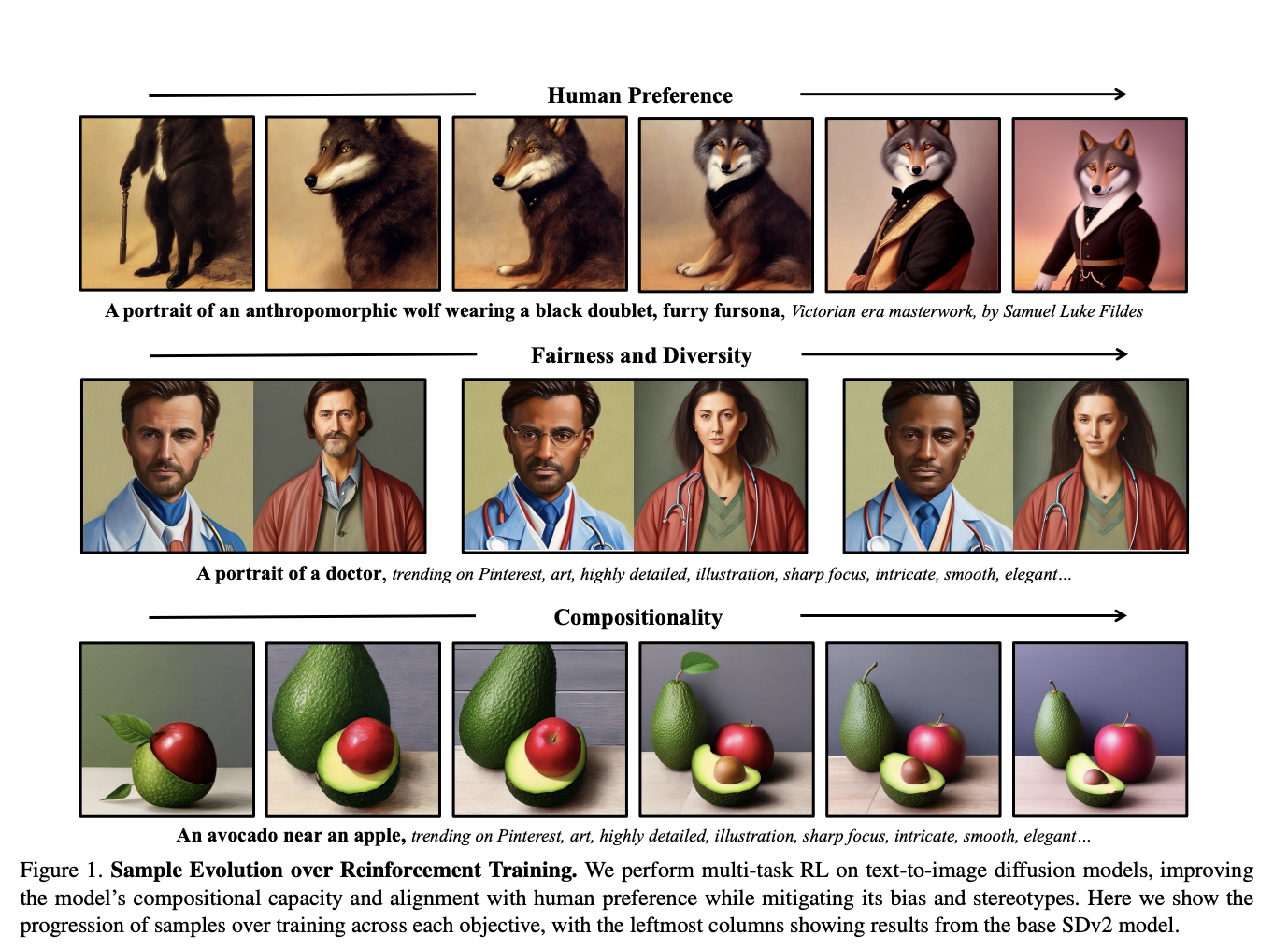

So if you are looking for a beginner-friendly…