In today's fast-paced business environment, efficient management of accounts receivable (AR) and accounts payable (AP) is crucial for maintaining healthy cash flow. Invoices are central to both processes, and invoice creation and invoice processing are critical steps in each. NetSuite's robust invoice management system offers a powerful solution to automate and streamline the…

QuickBooks is one of today’s top accounting and bookkeeping platforms, and its reputation is well-deserved. Dominant across multiple industries and popular with businesses of nearly every size, from freelance solopreneurs to enterprise megaliths, QuickBooks covers the most common accounting bases and is a well-rounded, general-purpose tool that legions of clients and fans enjoy. However, though QuickBooks enjoys name…

Introduction to Bank Reconciliation Journal Entries

Bank reconciliation is an important accounting process that ensures the accuracy and integrity of a company's financial records. It involves comparing the company’s internal financial records with those of the bank. At the heart of this reconciliation lies the creation of journal entries, which serve to…

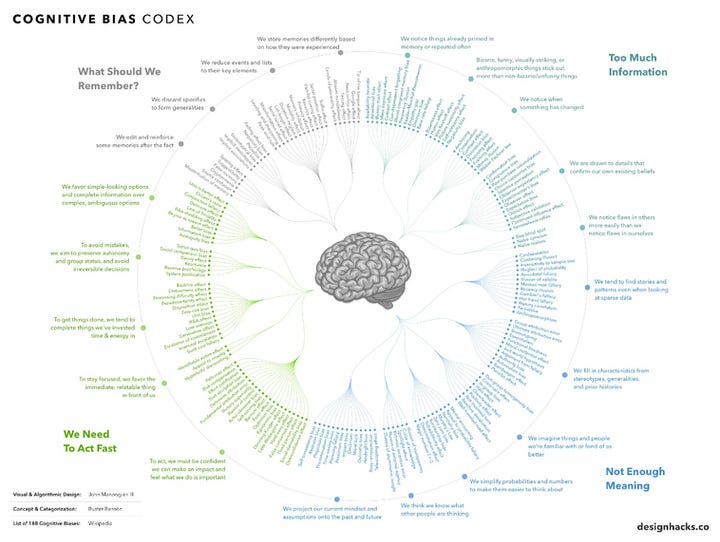

Why Bias in LLMs is Unavoidable

Originally Published on my Substack

Bias has something profound to teach us about the human condition, which relates to attention. We all have a limited amount of attention and give our attention to the things we value. But how do we know what objects to give our attention to?…

Artificial intelligence (AI) is changing how employees work in many industries, making tasks easier and faster. Over the years, it has grown from machines doing simple, repetitive tasks to understanding and predicting human needs.

While it’s true that AI brings some challenges to the workplace, it also creates new opportunities for businesses and workers.…

As one of the premier ERP solutions for growing businesses, Sage Intacct has become the go-to for leaders looking to scale. With AI-driven accounting capabilities, HR modules, payroll features, and a wide range of integrations, Sage Intacct supports businesses' needs today while helping them prepare for the future. On its own, Sage Intacct is a…

Image by Author

When you decided to become a data professional, you were aware of one thing: learning never stops. It can be hard to keep up with learning new things in the market or upskilling to ensure you remain competitive.

KDnuggets is here to help you with that journey.

We want to introduce…

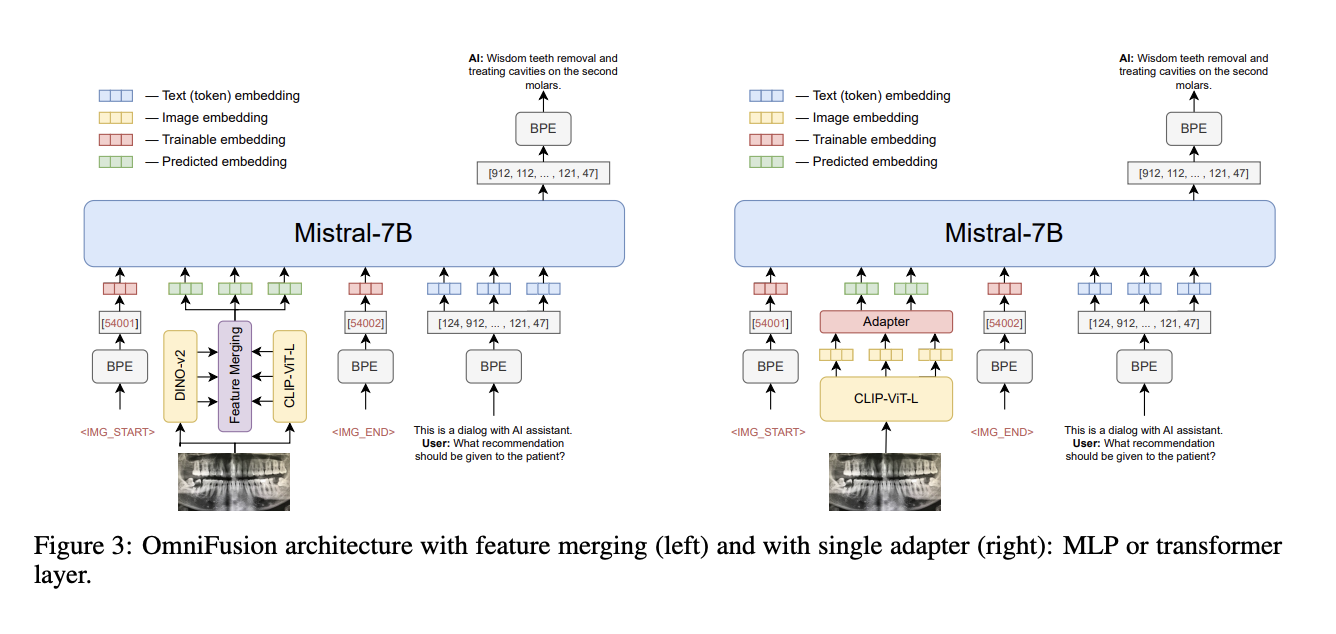

Multimodal architectures are revolutionizing the way systems process and interpret complex data. These advanced architectures facilitate simultaneous analysis of diverse data types such as text and images, broadening AI’s capabilities to mirror human cognitive functions more accurately. The seamless integration of these modalities is crucial for developing more intuitive and responsive AI systems that can…

Sports Analytics

Which players could help Fulham overcome their major flaws?

Photo by Mario Klassen on Unsplash

A few days ago, I was fortunate to participate in a football analytics hackathon organized by xfb Analytics[1], Transfermarkt[2], and Football Forum Hungary[3]. As we recently received permission to share our work, I decided to…

What is a Bank Reconciliation Statement

Bank reconciliation is the process that ensures a company's recorded cash balances match the funds in its bank accounts. A Bank Reconciliation Statement is a financial document that confirms that the cash balances recorded in the internal financial records agree with the financial records presented in the…