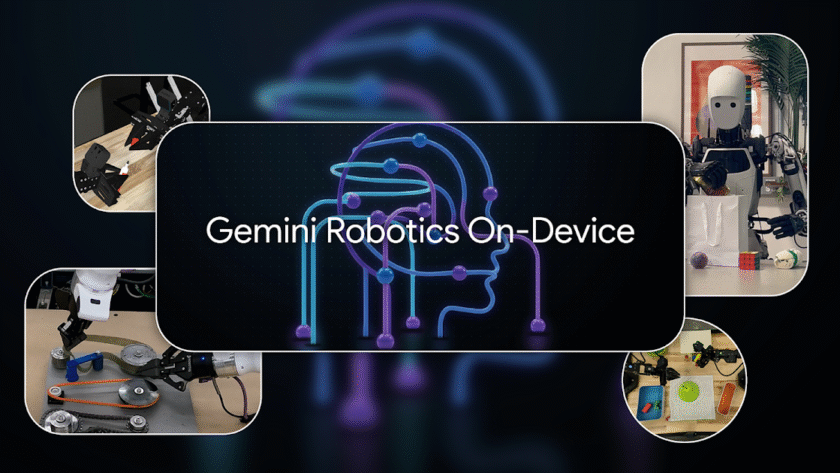

Bridging Perception and Action in Robotics

Multimodal Large Language Models (MLLMs) hold promise for enabling machines, such as robotic arms and legged robots, to perceive their surroundings, interpret scenarios, and take meaningful actions. The integration of such intelligence into physical systems is advancing the field of robotics, pushing it toward autonomous machines that don’t just…