Image by Author

# Introduction

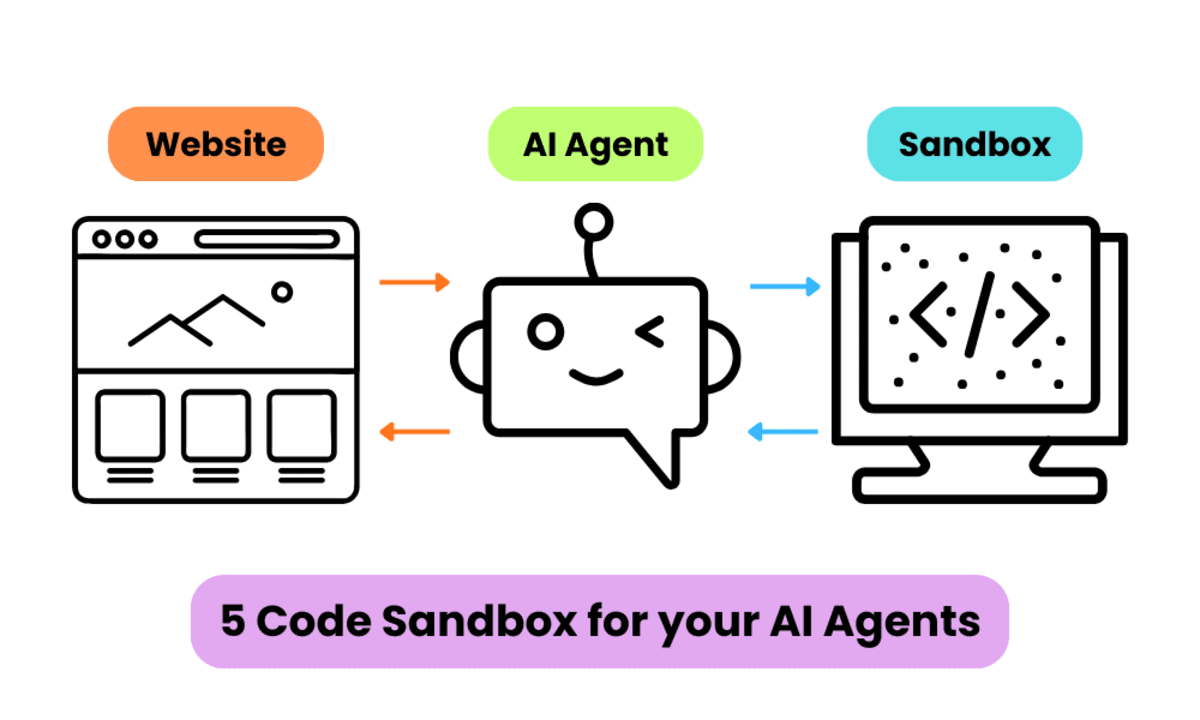

When you start letting AI agents write and run code, the first critical question is: where can that code execute safely?

Running LLM‑generated code directly on your application servers is risky. It can leak secrets, consume too many resources, or even break important systems, whether by accident or intent. That’s why agent‑native code sandboxes have quickly become essential parts of modern AI architecture.

With a sandbox, your agent can build, test, and debug code in a fully isolated environment. Once everything works, the agent can open a pull request for you to review and merge. You get working code without untrusted execution ever touching your real infrastructure.
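To make the isolation idea concrete, here is a minimal local sketch that uses only the Python standard library. The helper `run_untrusted` is a hypothetical name. It runs a code string in a separate process with a timeout and a stripped-down environment, so host secrets stored in environment variables are not inherited. It is only an illustration: a child process still shares your kernel, filesystem, and network, and closing that gap is the job of container- and microVM-based sandboxes.

```python
import os
import subprocess
import sys


def run_untrusted(code: str, timeout_s: float = 5.0) -> tuple[int, str, str]:
    """Run a code string in a separate Python process (illustration only, NOT a sandbox).

    Returns (exit_code, stdout, stderr); exit_code is -1 on timeout.
    """
    # Forward only the variables the interpreter needs, so secrets such as
    # API keys in the parent's environment are not inherited by the child.
    clean_env = {k: os.environ[k] for k in ("PATH", "SYSTEMROOT") if k in os.environ}
    try:
        result = subprocess.run(
            # -I (isolated mode) ignores PYTHON* env vars and the user site-packages dir.
            [sys.executable, "-I", "-c", code],
            capture_output=True,
            text=True,
            timeout=timeout_s,  # subprocess.run kills the child if it runs longer
            env=clean_env,
        )
    except subprocess.TimeoutExpired:
        return -1, "", f"timed out after {timeout_s}s"
    return result.returncode, result.stdout, result.stderr


# Example: a well-behaved snippet and a runaway loop.
print(run_untrusted("print(6 * 7)"))
print(run_untrusted("while True: pass", timeout_s=1.0))
```

The sandbox platforms below replace the child process with a container or micro-VM, which also cuts off filesystem and network access to the host.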

In this post, we will explore five leading code sandbox platforms designed specifically for AI agents:

- Modal

- Blaxel

- Daytona

- E2B

- Together Code Sandbox

# 1. Modal: Serverless AI Compute with Agent-Friendly Sandboxes

Modal is a serverless platform for AI and data teams. You define your workloads as code, and Modal runs them on CPU or GPU infrastructure, scaling up and down as needed.

One of its key features for agents is sandboxes: secure, ephemeral environments for running untrusted code. These sandboxes can be launched programmatically, given a time-to-live, and torn down automatically when idle.
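The time-to-live and idle-teardown rules described above can be modeled in a few lines. The `SandboxLease` class below is a hypothetical, platform-neutral sketch, not Modal's API; Modal's SDK exposes its own sandbox timeout settings, so check its `Sandbox` documentation for the exact parameters. A sandbox is torn down when it exceeds its hard TTL or sits idle for too long, and the clock is injectable so the logic can be tested without waiting.

```python
import time
from typing import Callable


class SandboxLease:
    """Hypothetical lifetime rules for one sandbox (not Modal's API).

    ttl_s:  hard time-to-live, measured from creation.
    idle_s: tear down after this many seconds without activity.
    """

    def __init__(self, ttl_s: float, idle_s: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl_s = ttl_s
        self.idle_s = idle_s
        self._clock = clock
        self.created_at = clock()
        self.last_active = self.created_at

    def touch(self) -> None:
        """Record activity, e.g. the agent just ran a command."""
        self.last_active = self._clock()

    def should_terminate(self) -> bool:
        """True once the sandbox is past its TTL or has been idle too long."""
        now = self._clock()
        expired = now - self.created_at >= self.ttl_s
        idle = now - self.last_active >= self.idle_s
        return expired or idle
```

A supervisor loop would call `should_terminate()` periodically and destroy the sandbox when it returns `True`. Enforcing both limits means a busy agent still cannot hold a sandbox forever, and an abandoned one does not linger until its TTL.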

What Modal gives your agents:

- Serverless containers for Python-first AI workloads, from data pipelines to LLM inference

- Sandboxed code execution so agents can compile and run code in isolated containers rather than on your main app infrastructure

- An everything-as-code approach, which fits agent workflows that generate infrastructure and pipelines dynamically

# 2. Blaxel: The Perpetual Sandbox Platform

Blaxel is an infrastructure platform that gives production-grade agents their own compute environments, including code sandboxes, tool servers, and LLMs.

Blaxel’s Sandboxes are designed specifically for agentic workloads: secure micro-VMs that spin up quickly, scale to zero when idle, and resume within roughly 25 ms even after weeks of inactivity.

What Blaxel gives your agents:

- Secure, instant-launching micro-VMs for running AI-generated code with full file system and process access

- Scale-to-zero with fast resume, so your long-lived agents can “sleep” without burning money, yet still feel stateful

- SDKs and tools (CLI, GitHub integration, Python SDK) to deploy agents and hook into Blaxel resources like tool servers and batch jobs
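Scale-to-zero with fast resume boils down to a small state machine. After an idle period, the workspace state is persisted and compute is released; the next request restores that state before it is served. The class below is a hypothetical sketch of that behavior, not Blaxel's SDK. A real platform snapshots an entire micro-VM, while this model keeps only a dictionary of files, and time is passed in explicitly to keep it deterministic.

```python
from __future__ import annotations

from enum import Enum


class State(Enum):
    RUNNING = "running"
    STANDBY = "standby"


class ScaleToZeroSandbox:
    """Hypothetical scale-to-zero model (not Blaxel's SDK). Times are in seconds."""

    def __init__(self, idle_s: float, now: float = 0.0) -> None:
        self.idle_s = idle_s
        self.state = State.RUNNING
        self.files: dict[str, str] = {}              # live workspace while RUNNING
        self._snapshot: dict[str, str] | None = None  # persisted state while in STANDBY
        self.resumes = 0
        self.last_active = now

    def tick(self, now: float) -> None:
        """Called periodically by a scheduler: park the sandbox once it is idle."""
        if self.state is State.RUNNING and now - self.last_active >= self.idle_s:
            self._snapshot = dict(self.files)  # persist the workspace
            self.files = {}                    # stands in for releasing CPU and memory
            self.state = State.STANDBY

    def write(self, now: float, path: str, content: str) -> None:
        self._ensure_running(now)
        self.files[path] = content

    def read(self, now: float, path: str) -> str:
        self._ensure_running(now)
        return self.files[path]

    def _ensure_running(self, now: float) -> None:
        # Any request against a parked sandbox first restores its snapshot.
        if self.state is State.STANDBY:
            self.files = self._snapshot or {}
            self._snapshot = None
            self.state = State.RUNNING
            self.resumes += 1
        self.last_active = now
```

From the agent's point of view the sandbox never lost its files. The only visible cost of sleeping is the resume latency, which is why platforms compete on that number.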

# 3. Daytona: Run AI Code

Daytona started as a platform for cloud-native development environments, then pivoted to secure infrastructure for running AI-generated code. It offers stateful, elastic sandboxes designed primarily for AI agents rather than human developers.

Daytona emphasizes fast sandbox creation: its marketing materials cite sub-90 ms from “code to execution,” and some sources describe its secure, elastic runtimes spinning up in around 27 ms.

What Daytona gives your agents:

- Lightning‑fast, stateful sandboxes built for continuous agent workflows

- Secure, isolated runtimes, using Docker by default with support for stronger isolation layers like Kata Containers and Sysbox

- Full programmatic control over file operations, Git, LSP, and code execution via a clean, agent‑friendly SDK
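The value of a stateful sandbox is that files and results persist between an agent's calls. As a rough local analogue, the hypothetical `LocalWorkspace` class below (not Daytona's SDK) wraps a temporary directory with file operations and command execution, and refuses paths that escape its root. Git and LSP support are out of scope for this sketch, and it provides no isolation from the host.

```python
import subprocess
import sys
import tempfile
from pathlib import Path


class LocalWorkspace:
    """Hypothetical stand-in for a stateful agent sandbox (not Daytona's SDK)."""

    def __init__(self) -> None:
        self._tmp = tempfile.TemporaryDirectory()
        self.root = Path(self._tmp.name)

    def write_file(self, rel_path: str, content: str) -> None:
        path = self._resolve(rel_path)
        path.parent.mkdir(parents=True, exist_ok=True)
        path.write_text(content)

    def read_file(self, rel_path: str) -> str:
        return self._resolve(rel_path).read_text()

    def list_files(self) -> list[str]:
        return sorted(p.relative_to(self.root).as_posix()
                      for p in self.root.rglob("*") if p.is_file())

    def run(self, *cmd: str, timeout_s: float = 30.0) -> subprocess.CompletedProcess:
        # Commands run with the workspace root as their working directory.
        return subprocess.run(list(cmd), cwd=self.root, capture_output=True,
                              text=True, timeout=timeout_s)

    def close(self) -> None:
        self._tmp.cleanup()

    def _resolve(self, rel_path: str) -> Path:
        # Reject paths such as "../../etc/passwd" that would leave the workspace.
        path = (self.root / rel_path).resolve()
        if not path.is_relative_to(self.root.resolve()):
            raise ValueError(f"path escapes workspace: {rel_path}")
        return path


ws = LocalWorkspace()
ws.write_file("src/app.py", "print('hello from the workspace')")
print(ws.list_files())
print(ws.run(sys.executable, "src/app.py").stdout)
ws.close()
```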

# 4. E2B: Sandbox for Computer Use Agents

E2B describes itself as cloud infrastructure for AI agents, offering secure, isolated sandboxes in the cloud that you control via Python and JavaScript SDKs.

Many developers know E2B from its Code Interpreter Sandbox: a way to give your app a runtime for executing code, similar in spirit to “Code Interpreter,” but under your control and tuned for agent workflows.

What E2B gives your agents:

- Open-source, sandboxed cloud environments for AI agents and AI-powered apps.

- Code Interpreter-style runtime for Python and JS/TS, exposed through SDKs and CLI.

- Designed for data analysis, visualization, codegen evals, and full AI-generated apps that need a secure execution layer.
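A Code Interpreter-style runtime behaves like a notebook kernel: variables persist across cells, output is captured per cell, and the value of a trailing expression is returned. The hypothetical `InterpreterSession` class below sketches those semantics with the standard library only. It is not E2B's SDK, and because it calls `exec` in the current process it provides no isolation at all; platforms like E2B run the equivalent kernel inside a cloud sandbox.

```python
from __future__ import annotations

import ast
import contextlib
import io
from typing import Any


class InterpreterSession:
    """Hypothetical Code Interpreter-style session (not E2B's SDK; no isolation)."""

    def __init__(self) -> None:
        self.namespace: dict[str, Any] = {}  # shared state across cells

    def run_cell(self, source: str) -> tuple[str, Any]:
        """Execute one cell; return (captured stdout, value of trailing expression or None)."""
        tree = ast.parse(source, mode="exec")
        last_expr = None
        # Split off a trailing expression so its value can be returned, as in a notebook.
        if tree.body and isinstance(tree.body[-1], ast.Expr):
            last_expr = ast.Expression(body=tree.body.pop().value)
        buf = io.StringIO()
        value = None
        with contextlib.redirect_stdout(buf):
            exec(compile(tree, "<cell>", "exec"), self.namespace)
            if last_expr is not None:
                value = eval(compile(last_expr, "<cell>", "eval"), self.namespace)
        return buf.getvalue(), value


session = InterpreterSession()
session.run_cell("import math\nradius = 2")
print(session.run_cell("round(math.pi * radius ** 2, 2)"))
```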

# 5. Together Code Sandbox: MicroVMs for AI Coding Products

Together AI is known for its AI-native cloud: open and specialized models, inference, and GPU clusters. On top of that, they launched Together Code Sandbox, a microVM-based environment for building AI coding tools at scale.

Together Code Sandbox provides fast, secure sandboxes for creating full-scale development environments purpose-built for AI. It gives teams configurable microVMs with rapid startup times, snapshotting, and mature dev-environment tooling, which developers use to power AI coding tools and agentic workflows on scalable, high-performance infrastructure.

What Together Code Sandbox gives your agents:

- VM creation from a snapshot in ~500 ms, and provisioning of new VMs from scratch in under 2.7 seconds (P95)

- Scaling from 2 to 64 vCPUs and 1 to 128 GB of RAM, with hot-swappable sizing for compute-intensive workloads

- Deep integration with Together’s model library and AI-native cloud, so your agents can both generate and execute code on the same platform
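The “(P95)” qualifier means that 95% of from-scratch provisions finish within 2.7 seconds, while a few may take longer. If you want to check startup figures like these against your own workload, a nearest-rank percentile over measured latencies is enough. In the sketch below, the helper names are hypothetical, and `create` is a placeholder for whichever SDK call creates a sandbox on the platform you are testing.

```python
import math
import time
from typing import Callable, Sequence


def percentile(samples: Sequence[float], p: float) -> float:
    """Nearest-rank percentile: the smallest sample with at least p% of samples at or below it."""
    if not samples:
        raise ValueError("no samples")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


def time_startup(create: Callable[[], object], runs: int = 20) -> list[float]:
    """Call create() `runs` times and return each call's wall-clock latency in seconds."""
    latencies = []
    for _ in range(runs):
        start = time.perf_counter()
        create()  # placeholder: your SDK's sandbox-creation call goes here
        latencies.append(time.perf_counter() - start)
    return latencies
```

`percentile(time_startup(my_create_fn), 95)`, with `my_create_fn` standing in for your SDK call, gives a P95 you can compare with published figures, provided you measure the same thing (snapshot restore versus cold provisioning).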

# How to Choose the Right Code Sandbox for Your AI Agents

All five options give agents a safe, isolated place to run code. Pick based on what you are optimizing for:

- Modal: Python-first platform for pipelines, batch jobs, training/inference, and sandboxed execution in one place.

- Blaxel / Daytona: Agent-native sandboxes that spin up fast and can persist like a real workspace.

- E2B: Code-interpreter style execution with strong JS + Python SDKs and open-source roots.

- Together Code Sandbox: Best fit if you are building production AI coding products and already run on Together’s infrastructure.

Abid Ali Awan (@1abidaliawan) is a certified data scientist professional who loves building machine learning models. Currently, he is focusing on content creation and writing technical blogs on machine learning and data science technologies. Abid holds a Master’s degree in technology management and a bachelor’s degree in telecommunication engineering. His vision is to build an AI product using a graph neural network for students struggling with mental illness.