Image by Author | Canva

“AI agents will become an integral part of our daily lives, helping us with everything from scheduling appointments to managing our finances. They will make our lives more convenient and efficient.”

—Andrew Ng

After the surge in popularity of large language models (LLMs), the next big thing is AI agents. As Andrew Ng says, they will become part of our daily lives, but how will this affect analytical workflows? Will agents mean the end of manual data analytics, or will they enhance the existing workflow?

In this article, we try to answer this question by walking through the timeline of data analytics and asking whether it is too early, or already too late, to adopt agents.

The Past of Data Analytics

Data analytics was not always as easy or fast as it is today. It went through several distinct phases, each shaped by the technology of its time and by the growing demand for data-driven decision-making from companies and individuals.

The Dominance of Microsoft Excel

In the 90s and early 2000s, we used Microsoft Excel for everything. Remember those school assignments or workplace tasks? You had to combine and sort columns by writing long formulas. There were few resources for learning them, so Excel courses were very popular.

Large datasets would slow this process down, and building a report was manual and repetitive.

The Rise of SQL, Python, and R

Eventually, Excel started to fall short, and SQL stepped in. It has been the rockstar ever since: structured, scalable, and fast. You probably remember the first time you used SQL; it did the analysis in seconds.

R was already available, and the rise of Python pushed things further. Python's readable syntax makes working with data feel almost like a conversation, so complex tasks could now be done in minutes. Companies noticed, and everyone started looking for talent that could work with SQL, Python, and R. This became the new standard.

BI Dashboards Everywhere

After 2018, another shift happened. Tools like Tableau and Power BI let you analyze data by clicking instead of coding, and they instantly produce polished visualizations collected into dashboards. These no-code tools caught on quickly, and companies began rewriting their job descriptions:

Power BI or Tableau experience is a must!

The Future: Entrance of LLMs

Then large language models entered the scene, and what an entrance it was! Everyone started talking about LLMs and trying to integrate them into their workflows, and headlines asking “Will LLMs replace data analysts?” became all too common.

However, early LLMs could not automate data analysis until ChatGPT's Code Interpreter came along. This was the game-changer that scared data analysts the most, because it showed that data analytics workflows could potentially be automated with just a click. How? Let’s see.

Data Exploration with LLMs

Consider this data project: Black Friday purchases. It has been used as a take-home assignment in Walmart's recruitment process for a data science position.

Here is the link to this data project: https://platform.stratascratch.com/data-projects/black-friday-purchases

Visit the page, download the dataset, and upload it to ChatGPT. Then use this prompt structure:

I have attached my dataset.

Here is my dataset description:

[Copy-paste from the platform]

Perform data exploration using visuals.
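Behind the scenes, Code Interpreter answers a prompt like this by writing and running Python. The sketch below is a rough, hypothetical illustration of the kind of first-pass exploration code involved, not what ChatGPT actually generates. It uses a tiny synthetic DataFrame, and the column names (`Gender`, `Age`, `Purchase`) are assumptions standing in for the real dataset's schema; it also skips the charts to keep the focus on the summary logic.

```python
import pandas as pd

# Tiny synthetic stand-in for the Black Friday data. The column names
# (Gender, Age, Purchase) are assumptions, not the project's confirmed schema.
df = pd.DataFrame({
    "Gender": ["M", "F", "M", "F", "M"],
    "Age": ["26-35", "18-25", "26-35", "36-45", "26-35"],
    "Purchase": [8370, 15200, 1422, 1057, 7969],
})


def explore(df: pd.DataFrame, group_col: str = "Gender", target_col: str = "Purchase") -> dict:
    """Collect the kind of first-pass summary an exploration usually starts with."""
    numeric = df.select_dtypes(include="number")
    categorical = df.select_dtypes(exclude="number")
    return {
        "shape": df.shape,
        "missing": df.isna().sum().to_dict(),
        "numeric_summary": numeric.describe(),
        # Most frequent value in each non-numeric column
        "top_categories": {c: categorical[c].value_counts().idxmax() for c in categorical},
        # Average of the target per group, a typical first "insight"
        "mean_by_group": df.groupby(group_col)[target_col].mean().to_dict(),
    }


summary = explore(df)
print(summary["shape"])
print(summary["top_categories"])
print(summary["mean_by_group"])
```

With the real file, you would replace the synthetic frame with `pd.read_csv(...)` on the downloaded data and adjust the two column arguments to match its actual columns.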

Here is the first part of the output.

It isn't finished yet, though. It keeps going, so let’s see what else it has to show us.

Now we have an overall summary of the dataset and visualizations. Let’s look at the third part of the data exploration, which is a written summary rather than charts.

The best part? It did all of this in seconds. But AI agents go a step further, so let’s build an AI agent that automates data exploration.

Data Analytics Agents

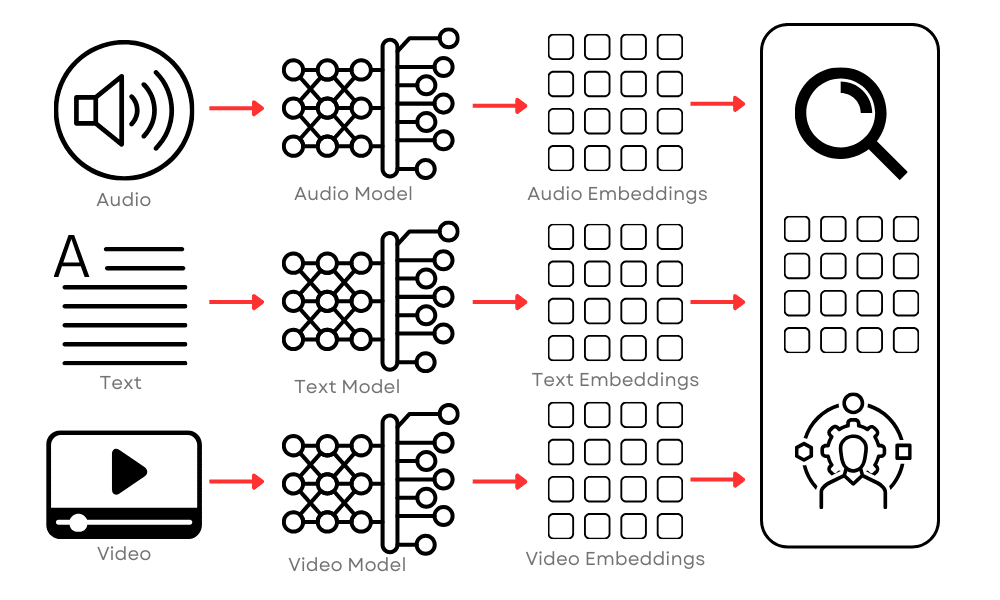

Agents go one step further than traditional LLM interaction. As powerful as LLMs were, it felt like something was missing. Or is it just humanity's inevitable urge to discover an intelligence that exceeds its own? With an LLM, you have to prompt it step by step, as we did above. A data analytics agent needs little or no human intervention: given a goal, it decides which steps to take and carries them out itself.
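The core idea behind an agent is a loop: decide on the next action, run a tool, observe the result, and feed that observation back into the next decision. The sketch below illustrates that loop under loud assumptions: the tool names are hypothetical, and `scripted_planner` is a fixed plan standing in for the LLM, which in a real agent would choose each step itself.

```python
from typing import Callable, Dict, List, Optional, Tuple

import pandas as pd

# Hypothetical tools the agent can call; each inspects a DataFrame and returns text.
TOOLS: Dict[str, Callable[[pd.DataFrame], str]] = {
    "shape": lambda df: f"{df.shape[0]} rows x {df.shape[1]} columns",
    "missing": lambda df: f"missing values: {int(df.isna().sum().sum())}",
    "describe": lambda df: df.describe().to_string(),
}


def scripted_planner(goal: str, history: List[Tuple[str, str]]) -> Optional[str]:
    """Stand-in for the LLM: return the next tool to call, or None when done.

    A real agent would send the goal and the history so far to a model and
    let it choose; this hypothetical planner just follows a fixed plan.
    """
    used = {tool for tool, _ in history}
    for tool in ("shape", "missing", "describe"):
        if tool not in used:
            return tool
    return None


def run_agent(goal: str, df: pd.DataFrame, max_steps: int = 10) -> List[Tuple[str, str]]:
    """Decide, act, observe; repeat until the planner stops or max_steps is hit."""
    history: List[Tuple[str, str]] = []
    for _ in range(max_steps):
        tool = scripted_planner(goal, history)  # decide
        if tool is None:
            break
        observation = TOOLS[tool](df)  # act
        history.append((tool, observation))  # observe; fed back on the next turn
    return history


for tool, observation in run_agent("explore this dataset", pd.DataFrame({"x": [1.0, None, 3.0]})):
    print(f"[{tool}]\n{observation}\n")
```

The `max_steps` cap is a common safeguard so that a planner that never says "done" cannot loop forever.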

Data Exploration and Visualization Agent Implementation

Let’s build an agent together using LangChain and Streamlit.

Setting up the Agent

First, let’s import all the libraries (install them with pip first if you haven't already).

```python
import io
import warnings

import matplotlib.pyplot as plt
import pandas as pd
import seaborn as sns
import streamlit as st
from langchain.agents.agent_types import AgentType
from langchain_experimental.agents.agent_toolkits import create_pandas_dataframe_agent
from langchain_openai import ChatOpenAI

# Silence library warnings in the app output (must come after `import warnings`)
warnings.filterwarnings('ignore')
```
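Before launching the app, it can help to confirm that every package is installed. This is a small optional sketch, not part of the original app; the list uses the import names from the block above plus `openpyxl`, which pandas needs to read `.xlsx` files.

```python
import importlib.util

# Import names of the packages this app relies on (openpyxl backs pd.read_excel for .xlsx)
REQUIRED = ["streamlit", "pandas", "langchain", "langchain_experimental",
            "langchain_openai", "matplotlib", "seaborn", "openpyxl"]


def missing_modules(names):
    """Return the module names that cannot be found in the current environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED)
    print("All set" if not missing else f"Missing: {', '.join(missing)}")
```

`find_spec` only locates a module without importing it, so the check stays fast even for heavy packages.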

The code below configures the Streamlit page and lets you upload a CSV or Excel file.

```python
api_key = "api-key-here"  # replace with your OpenAI API key

st.set_page_config(page_title="Agentic Data Explorer", layout="wide")
st.title("Chat With Your Data — Agent + Visual Insights")

uploaded_file = st.file_uploader("Upload your CSV or Excel file", type=["csv", "xlsx"])

if uploaded_file:
    # Read the file with the pandas reader that matches its extension
    if uploaded_file.name.endswith(".csv"):
        df = pd.read_csv(uploaded_file)
    elif uploaded_file.name.endswith(".xlsx"):
        df = pd.read_excel(uploaded_file)
```
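The extension check above can also be pulled into a plain function that you can test without running Streamlit. The sketch below is a hypothetical refactor: `load_table` is not part of the original app. It matches extensions case-insensitively and raises on unsupported types instead of leaving `df` undefined.

```python
import io

import pandas as pd


def load_table(filename: str, raw: bytes) -> pd.DataFrame:
    """Read CSV or Excel bytes into a DataFrame, choosing the reader by extension."""
    name = filename.lower()  # accept "DATA.CSV" as well as "data.csv"
    buffer = io.BytesIO(raw)
    if name.endswith(".csv"):
        return pd.read_csv(buffer)
    if name.endswith(".xlsx"):
        return pd.read_excel(buffer)  # needs the openpyxl package
    raise ValueError(f"Unsupported file type: {filename}")


print(load_table("Sales.CSV", b"region,amount\nnorth,10\nsouth,20\n"))
```

Inside the app, Streamlit's uploaded file object is file-like, so a call along the lines of `load_table(uploaded_file.name, uploaded_file.getvalue())` should work; treat that wiring as an untested assumption.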

Next comes the data exploration and visualization code. The if blocks decide which charts to draw based on the column types found in the uploaded dataset.

```python
    # --- Basic Exploration ---
    st.subheader("📌 Data Preview")
    st.dataframe(df.head())

    st.subheader("🔎 Basic Statistics")
    st.dataframe(df.describe())

    st.subheader("📋 Column Info")
    buffer = io.StringIO()
    df.info(buf=buffer)  # df.info() prints, so capture its output in a buffer
    st.text(buffer.getvalue())

    # --- Auto Visualizations ---
    st.subheader("📊 Auto Visualizations (Top 2 Columns)")
    numeric_cols = df.select_dtypes(include=["int64", "float64"]).columns.tolist()
    categorical_cols = df.select_dtypes(include=["object", "category"]).columns.tolist()

    if numeric_cols:
        # Histogram (with KDE curve) of the first numeric column
        col = numeric_cols[0]
        st.markdown(f"### Histogram for `{col}`")
        fig, ax = plt.subplots()
        sns.histplot(df[col].dropna(), kde=True, ax=ax)
        st.pyplot(fig)

    if categorical_cols:
        # Bar chart of the first categorical column
        col = categorical_cols[0]
        # Limiting to the top 15 categories by count
        top_cats = df[col].value_counts().head(15)
        st.markdown(f"### Top 15 Categories in `{col}`")
        fig, ax = plt.subplots()
        top_cats.plot(kind='bar', ax=ax)
        plt.xticks(rotation=45, ha="right")
        st.pyplot(fig)
```
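Stripped of the plotting calls, those if blocks come down to a column-selection rule. The sketch below restates it as two hypothetical helper functions that are easy to test in isolation. One deliberate difference: it selects with `"number"`, which also picks up types such as `int32` and `float32` that the explicit `["int64", "float64"]` list above would skip.

```python
from typing import Optional, Tuple

import pandas as pd


def pick_chart_columns(df: pd.DataFrame) -> Tuple[Optional[str], Optional[str]]:
    """Return (first numeric column, first categorical column); None if absent."""
    numeric = df.select_dtypes(include="number").columns
    categorical = df.select_dtypes(include=["object", "category"]).columns
    return (numeric[0] if len(numeric) else None,
            categorical[0] if len(categorical) else None)


def top_categories(df: pd.DataFrame, col: str, n: int = 15) -> pd.Series:
    """Counts of the n most frequent values in col, largest first."""
    return df[col].value_counts().head(n)


demo = pd.DataFrame({"city": ["A", "B", "A"], "sales": [1.0, 2.0, 3.0]})
print(pick_chart_columns(demo))
print(top_categories(demo, "city"))
```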

Next, set up the agent, which answers free-form questions about your data.

```python
    st.divider()
    st.subheader("🧠 Ask Anything to Your Data (Agent)")
    prompt = st.text_input("Try: 'Which category has the highest average sales?'")

    if prompt:
        # The agent writes pandas code for the question and runs it against df
        agent = create_pandas_dataframe_agent(
            ChatOpenAI(
                temperature=0,
                model="gpt-3.5-turbo",  # Or "gpt-4" if you have access
                api_key=api_key,
            ),
            df,
            verbose=True,
            agent_type=AgentType.OPENAI_FUNCTIONS,
            # Required opt-in: the agent executes model-generated Python locally
            allow_dangerous_code=True,
        )

        with st.spinner("Agent is thinking..."):
            response = agent.invoke(prompt)

        st.success("✅ Answer:")
        st.markdown(f"> {response['output']}")
```

Testing the Agent

Now everything is ready. Save the script as a Python (.py) file.

Next, open a terminal in the script's directory and launch the app with Streamlit's `streamlit run` command, followed by the file name.

And voilà!

Your agent is ready. Let’s test it!

Final Thoughts

In this article, we traced the evolution of data analytics from the 90s to today, from Excel to LLM agents. We then used ChatGPT to explore a real-life dataset that was given in an actual data science job interview.

Finally, we built an agent that automates data exploration and visualization using Streamlit, LangChain, and other Python libraries, bringing together past and new data analytics workflows. And we did it all with a real-life data project.

Whether you adopt them today or tomorrow, AI agents are no longer a future trend; they’re the next phase of analytics.

Nate Rosidi is a data scientist and works in product strategy. He’s also an adjunct professor teaching analytics, and is the founder of StrataScratch, a platform helping data scientists prepare for their interviews with real interview questions from top companies. Nate writes on the latest trends in the career market, gives interview advice, shares data science projects, and covers everything SQL.